Overview

Researchers at the University of Birmingham, part of the Wellcome Trust-funded Proton Radiotherapy Verification and Dosimetry Applications (PRaVDA) Consortium, are helping to design, build, and commission a device that measures the position and energy of the protons used to attack tumours that other forms of cancer treatment can struggle to treat. Such measurements can help ensure that the tumour is being treated optimally, reducing the risk to patients. To optimise the design, the team runs computer simulations of the experimental setup, which lets them investigate the effect of changing various parameters without the need for extensive laboratory testing.

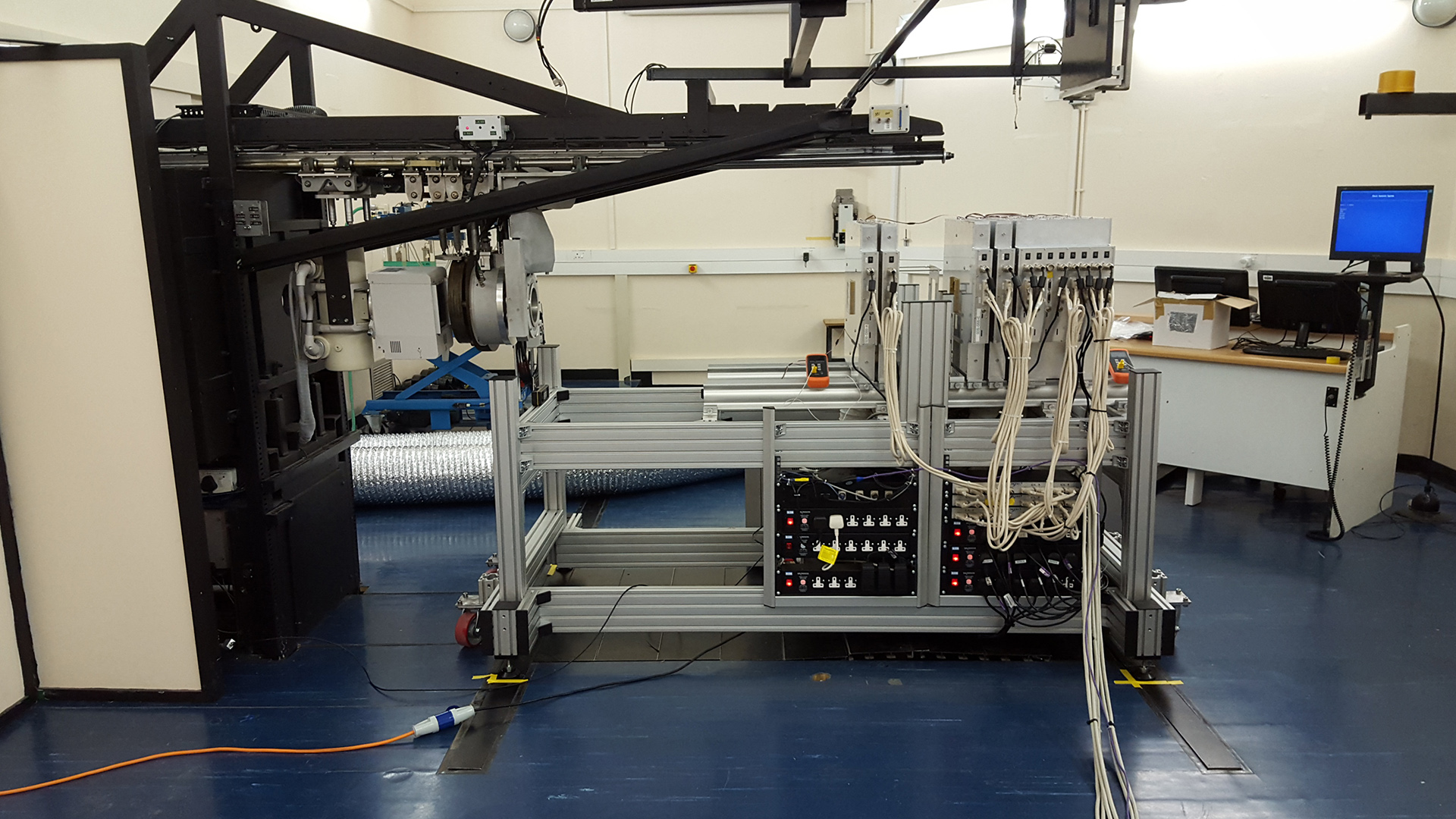

The PRaVDA experimental setup at the iThemba LABS beamline, South Africa. Image credit: T. Price/PRaVDA Consortium 2016.

The problem

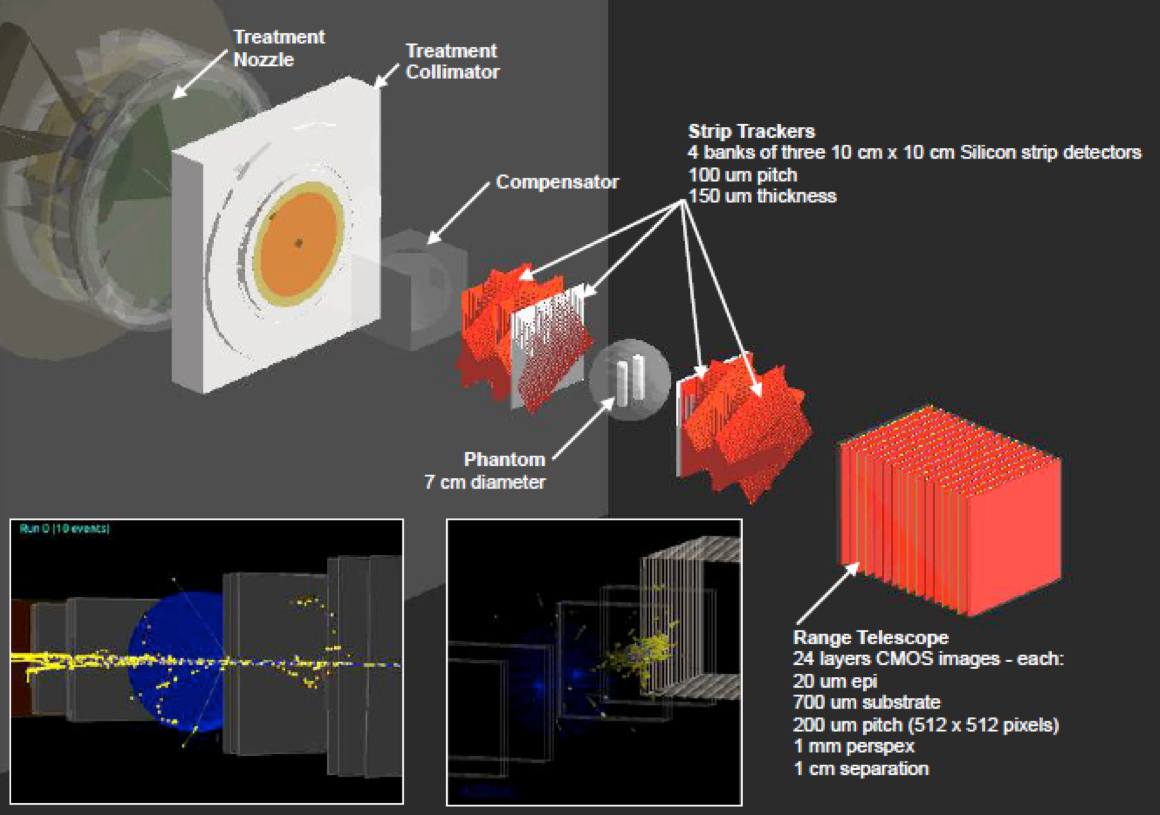

The PRaVDA team’s experimental setup is modelled using the GEANT4-based Super Simulation (SuSi) software suite. Thousands of parameter settings, covering changes to the detectors used (silicon strips and pixels) and their settings, the types and sources of particles, and the group’s novel event reconstruction algorithms, needed to be run with simulations featuring millions of particle interactions. Such a workload proved too much for the university’s local computing cluster, and other UK-based compute resources were not optimised for such a massively-parallelisable task on the scale required by PRaVDA.

A visualisation of the PRaVDA Super Simulation model. Inset: some example proton beam interactions with GEANT4. Image credit: T. Price/PRaVDA 2015.

The solution

GridPP, who regularly handle billions of GEANT4-based simulations for the Large Hadron Collider (LHC) experiments and other particle physics projects, were suggested to the PRaVDA team as potential collaborators. After a successful initial meeting to establish the group’s requirements, the PRaVDA SuSi workflow was ported to run on GridPP resources via the GridPP DIRAC service. Simulation jobs were configured and managed using the Ganga interface, and the team’s software was distributed to the grid using the CernVM File System (CVMFS). As a result, the team has been able to move to simulations using five times more particles per simulation while reducing total run times from weeks to hours.
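A parameter sweep of this kind suits the grid because every combination of settings can run as an independent job. The sketch below is purely illustrative and uses only the Python standard library: the parameter names, their values, and the `build_job_arguments` helper are assumptions made for this example, not the PRaVDA team's actual settings or code. It shows how a small grid of settings might be expanded into one argument list per simulation job, ready to be handed to a job submission tool.

```python
from itertools import product

# Hypothetical parameter grid: names and values are illustrative only,
# not the settings used by the PRaVDA team.
PARAMETER_GRID = {
    "detector": ["strip", "pixel"],
    "beam_energy_mev": [125, 150, 175, 200],
    "n_protons": [1_000_000, 5_000_000],
}


def build_job_arguments(grid):
    """Return one command-line argument list per combination of values."""
    names = list(grid)
    jobs = []
    # product() yields every combination of one value per parameter.
    for values in product(*(grid[name] for name in names)):
        args = []
        for name, value in zip(names, values):
            args.extend([f"--{name}", str(value)])
        jobs.append(args)
    return jobs


if __name__ == "__main__":
    job_args = build_job_arguments(PARAMETER_GRID)
    print(f"{len(job_args)} independent simulation jobs")
    print(job_args[0])
```

Because no job depends on another's output, the resulting list can be split across as many worker nodes as are available, which is what makes the workload a good match for a multi-site grid.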

What they said

“GridPP has been invaluable to us as a project. There were experts on hand to get me up and running, which was great as time on the project is short. It’s easy to quickly run test jobs, and running on 64 cores at a time has vastly reduced wait times. CVMFS has greatly simplified our software deployment too. A huge thank you to those who have supported us!”

Dr Tony Price, University of Birmingham/PRaVDA

Further details

Services used

- Ganga: for grid job submission and management;

- GridPP DIRAC: for multi-site workload and data management;

- CernVM-FS: for software deployment and management.

Supporting GridPP sites

- Birmingham

- Liverpool

Virtual Organisation(s)

Related publications

- T. Price et al., “Expected proton signal sizes in the PRaVDA Range Telescope for proton Computed Tomography”, JINST 10 P05013 (2015)

- G. Poludniowski et al., “Proton radiography and tomography with application to proton therapy”, Br. J. Radiol. 88 20150134 (2015)